Installing Kubernetes 1.8 on CentOS 7 with kubeadm

1. System configuration

1.1 Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

1.2 Disable SELinux

setenforce 0

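setenforce 0 only switches SELinux to permissive mode until the next reboot. To make the change permanent, edit /etc/selinux/config and set SELINUX to disabled: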

SELINUX=disabled
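The permanent part of this step can also be done non-interactively with sed. A minimal sketch, assuming GNU sed; the function name disable_selinux_config and its optional path argument (so it can be tried on a copy of the file first) are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: persistently disable SELinux (hypothetical helper, GNU sed assumed).
# The config path defaults to /etc/selinux/config but can be overridden for testing.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  # Replace the configured mode (enforcing/permissive) with "disabled";
  # SELINUXTYPE= is not matched because "=" must follow "SELINUX" directly.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage on a real host (as root):
#   setenforce 0              # permissive for the current boot
#   disable_selinux_config    # disabled from the next boot on
```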

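1.3 Disable system swap

Starting with Kubernetes 1.8, the kubelet refuses to start under its default configuration if system swap is enabled. There are two ways to deal with this: option 1 is to lift the restriction with the kubelet flag --fail-swap-on=false; option 2 is to turn off system swap, which is what this guide does: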

swapoff -a

Edit /etc/fstab and comment out the swap entry so that swap is not mounted again at boot, then run free -m to confirm that swap is off.
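Commenting out the swap entry can be scripted the same way. A sketch assuming GNU sed and an fstab in which swap appears as a separate whitespace-delimited field; comment_out_swap is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: comment out swap entries in fstab (hypothetical helper, GNU sed assumed).
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Prefix "#" to every non-comment line that has a " swap " field;
  # lines that are already commented are left alone, so reruns are safe.
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage on a real host (as root):
#   swapoff -a
#   comment_out_swap
#   free -m        # the "Swap:" line should show a total of 0
```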

2. Install Docker

Note: perform this step on all nodes.

2.1 Download the Docker packages

- Download location: https://download.docker.com/linux/centos/7/x86_64/stable/Packages/

- Download the packages:

mkdir ~/k8s

cd ~/k8s

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm

2.2 Install Docker

cd ~/k8s

yum install ./docker-ce-selinux-17.03.2.ce-1.el7.centos.noarch.rpm

yum install ./docker-ce-17.03.2.ce-1.el7.centos.x86_64.rpm

systemctl enable docker

systemctl start docker

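2.3 Configure Docker

- Allow forwarding in the FORWARD chain of the iptables filter table

Starting with Docker 1.13, Docker sets the default policy of the FORWARD chain to DROP, which can block Pod traffic between nodes. Edit /lib/systemd/system/docker.service and add the following line above the ExecStart=... line:

ExecStartPost=/usr/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT

The result looks like this: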

......
ExecStartPost=/usr/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT
ExecStart=/usr/bin/dockerd
......

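- Configure the cgroup driver

Docker's cgroup driver must match the one the kubelet is configured with (systemd here). Create /etc/docker/daemon.json with the following content:

{
  "exec-opts": ["native.cgroupdriver=systemd"]
}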

- Restart the Docker service

systemctl daemon-reload && systemctl restart docker && systemctl status docker
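The steps in 2.3 can be scripted as below. This sketch deliberately differs from the text in one point: it puts the ExecStartPost line into a systemd drop-in under /etc/systemd/system/docker.service.d/ instead of editing the packaged unit file, so a Docker package upgrade does not undo it. The file name forward.conf, the ROOT prefix and both function names are hypothetical, and the script overwrites any existing daemon.json.

```shell
#!/usr/bin/env bash
# Sketch of section 2.3 (hypothetical helpers). ROOT lets you write the files
# into a scratch directory first; leave it empty to write to the real paths.
ROOT="${ROOT:-}"

write_docker_config() {
  local dropin_dir="$ROOT/etc/systemd/system/docker.service.d"
  mkdir -p "$dropin_dir" "$ROOT/etc/docker"

  # Drop-in instead of editing /lib/systemd/system/docker.service directly;
  # "forward.conf" is an arbitrary file name.
  cat > "$dropin_dir/forward.conf" <<'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -I FORWARD -s 0.0.0.0/0 -j ACCEPT
EOF

  # Overwrites any existing daemon.json: merge by hand if you already have one
  cat > "$ROOT/etc/docker/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Print the cgroup driver reported by the running Docker daemon
docker_cgroup_driver() {
  docker info 2>/dev/null | awk -F': ' '/Cgroup Driver/ {print $2}'
}

# Usage on a real host (as root):
#   write_docker_config
#   systemctl daemon-reload && systemctl restart docker
#   docker_cgroup_driver      # should print: systemd
```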

3. Install Kubernetes

3.1 Install kubeadm, kubectl and kubelet

- Configure the package repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

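- Fix routing problems: make bridged traffic pass through iptables

If sysctl reports that the net.bridge.* keys do not exist, load the bridge netfilter module first with modprobe br_netfilter.

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF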

sysctl --system

- Adjust the swappiness parameter

Add the following line to /etc/sysctl.d/k8s.conf:

vm.swappiness=0

Run sysctl -p /etc/sysctl.d/k8s.conf to apply the change.

- Install kubeadm, kubectl and kubelet

① List the available versions

yum list --showduplicates | grep 'kubeadm\|kubectl\|kubelet'

② Install a specific version

yum install kubeadm-1.8.1 kubectl-1.8.1 kubelet-1.8.1

systemctl enable kubelet

systemctl start kubelet
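The kernel parameters from this section can be written in one step. A minimal sketch; write_k8s_sysctl and its optional path argument are hypothetical, and the br_netfilter line in the usage comment reflects that the net.bridge.* keys only appear once that module is loaded:

```shell
#!/usr/bin/env bash
# Sketch: write all kernel parameters from section 3.1 into one file
# (hypothetical helper; the path defaults to /etc/sysctl.d/k8s.conf).
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness = 0
EOF
}

# Usage on a real host (as root):
#   modprobe br_netfilter     # provides the net.bridge.* keys
#   write_k8s_sysctl
#   sysctl -p /etc/sysctl.d/k8s.conf
```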

3.2 Initialize the cluster with kubeadm init

Note: run this subsection on the Master node only.

- Initialize the Master node

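kubeadm init --kubernetes-version=v1.8.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=master.k8s.samwong.im

Note: --pod-network-cidr=10.244.0.0/16 matches the default network in Flannel's kube-flannel.yml (see 3.3). --apiserver-advertise-address is documented as an IP address; if kubeadm rejects the hostname, pass the Master's IP address instead.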

- Let a regular user access the cluster with kubectl

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Check the cluster status

[root@master ~]# kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health": "true"}

- Cleanup commands if initialization fails

kubeadm reset

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/
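The cleanup commands above (repeated in 3.6) can be wrapped in a function. A sketch under these assumptions: it uses ip link from iproute2 instead of ifconfig, which comes from net-tools and is missing on minimal CentOS 7 installs, and it only touches interfaces that exist; the DRY_RUN switch and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: reset a node and remove the CNI leftovers (hypothetical helpers).
# With DRY_RUN=1 the commands are only printed, not executed.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

cleanup_node() {
  local ifc
  run kubeadm reset
  for ifc in cni0 flannel.1; do
    # Skip interfaces that do not exist (always listed in dry-run mode)
    if [ "${DRY_RUN:-0}" = "1" ] || ip link show "$ifc" >/dev/null 2>&1; then
      run ip link set "$ifc" down
      run ip link delete "$ifc"
    fi
  done
  run rm -rf /var/lib/cni/
}

# Usage (as root): cleanup_node
# Preview only:    DRY_RUN=1 cleanup_node
```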

3.3 Install the Pod network

Note: run this subsection on the Master node only.

- Install Flannel

[root@master ~]# cd ~/k8s
[root@master k8s]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
[root@master k8s]# kubectl apply -f kube-flannel.yml
clusterrole "flannel" created
clusterrolebinding "flannel" created
serviceaccount "flannel" created
configmap "kube-flannel-cfg" created
daemonset "kube-flannel-ds" created

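- Specify the network interface

By default flanneld uses the interface that holds the default route. If the hosts have several network interfaces, use the --iface flag in kube-flannel.yml to name the interface on the cluster's internal network; otherwise DNS resolution may fail. Download kube-flannel.yml and add --iface with the interface name to the flanneld startup arguments, for example:

......
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
......
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.9.0-amd64
        command: [ "/opt/bin/flanneld", "--ip-masq", "--kube-subnet-mgr", "--iface=eth1" ]
......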

- Check the Pod status

kubectl get pod --all-namespaces -o wide

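3.4 Let the Master node run workloads

In a cluster initialized with kubeadm, Pods are not scheduled onto the Master node for security reasons: kubeadm taints it with node-role.kubernetes.io/master:NoSchedule. Run the following command to let the Master node take workloads, replacing node1 with the Master's node name as shown by kubectl get nodes:

kubectl taint nodes node1 node-role.kubernetes.io/master-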

3.5 Add nodes to the Kubernetes cluster

- Get the token on the Master

kubeadm token list | grep authentication,signing | awk '{print $1}'

- Get the discovery-token-ca-cert-hash

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

- Join the node to the cluster (run on the node)

kubeadm join --token=a20844.654ef6410d60d465 --discovery-token-ca-cert-hash sha256:0c2dbe69a2721870a59171c6b5158bd1c04bc27665535ebf295c918a96de0bb1 master.k8s.samwong.im:6443

- List the nodes in the cluster

[root@master ~]# kubectl get nodes
NAME                    STATUS    ROLES     AGE       VERSION
master.k8s.samwong.im   Ready     master    1d        v1.8.1
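The token and CA hash from this section can be combined into the join command on the Master. A sketch reusing the same openssl pipeline; ca_cert_hash and join_command are hypothetical helper names, and taking the first listed token is an assumption:

```shell
#!/usr/bin/env bash
# Sketch: assemble the kubeadm join command on the Master (hypothetical helpers).

# SHA-256 of the CA's public key, same pipeline as in 3.5
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Print a join command for the given token and API server endpoint
join_command() {
  local token="$1" endpoint="$2" ca="${3:-/etc/kubernetes/pki/ca.crt}"
  echo "kubeadm join --token=${token} --discovery-token-ca-cert-hash sha256:$(ca_cert_hash "$ca") ${endpoint}"
}

# Usage on the Master:
#   token="$(kubeadm token list | grep authentication,signing | awk '{print $1}' | head -n1)"
#   join_command "$token" master.k8s.samwong.im:6443
```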

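3.6 Remove a node from the Kubernetes cluster

- On the Master node (replace master.k8s.samwong.im with the name of the node being removed)

kubectl drain master.k8s.samwong.im --delete-local-data --force --ignore-daemonsets

kubectl delete node master.k8s.samwong.im

- On the node being removed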

kubeadm reset

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

- List the cluster nodes

kubectl get nodes

3.7 Deploy the Dashboard add-on

- Download the Dashboard manifest

cd ~/k8s

wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

- Modify the Dashboard Service

Edit kubernetes-dashboard.yaml and add type: NodePort to the Dashboard Service so that the Dashboard is exposed on a port of every node:

# ------------------- Dashboard Service ------------------- #

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

- Install the Dashboard add-on

kubectl create -f kubernetes-dashboard.yaml

- Grant a Dashboard account cluster administrator rights

Create a ServiceAccount named kubernetes-dashboard-admin, bind it to the cluster-admin ClusterRole, and save the following as kubernetes-dashboard-admin.rbac.yaml:

---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard-admin
    namespace: kube-system

Apply it:

[root@master ~]# kubectl create -f kubernetes-dashboard-admin.rbac.yaml
serviceaccount "kubernetes-dashboard-admin" created
clusterrolebinding "kubernetes-dashboard-admin" created

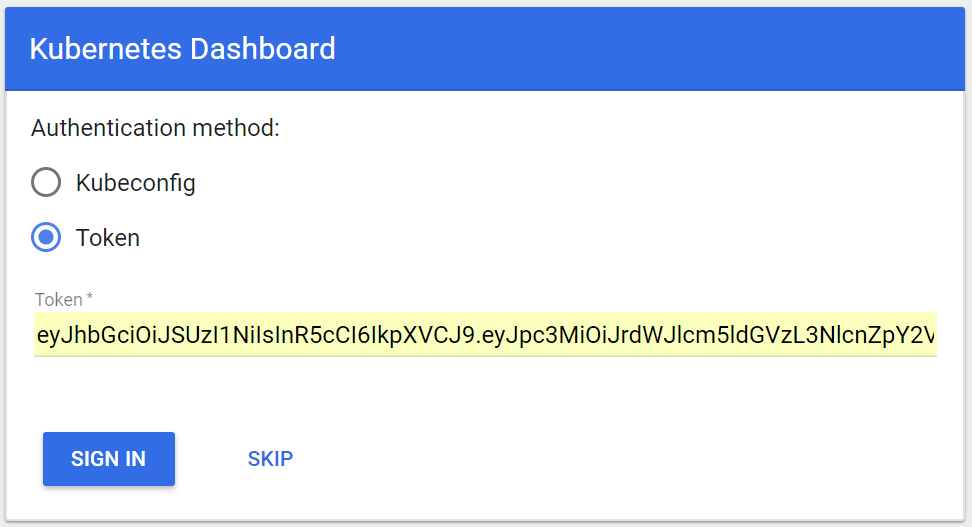

- Get the token of kubernetes-dashboard-admin

[root@master ~]# kubectl -n kube-system get secret | grep kubernetes-dashboard-admin
kubernetes-dashboard-admin-token-jxq7l   kubernetes.io/service-account-token   3      22h
[root@master ~]# kubectl describe -n kube-system secret/kubernetes-dashboard-admin-token-jxq7l
Name:         kubernetes-dashboard-admin-token-jxq7l
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name=kubernetes-dashboard-admin
              kubernetes.io/service-account.uid=686ee8e9-ce63-11e7-b3d5-080027d38be0

Type:  kubernetes.io/service-account-token

Data
====
namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi1qeHE3bCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjY4NmVlOGU5LWNlNjMtMTFlNy1iM2Q1LTA4MDAyN2QzOGJlMCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.Ua92im86o585ZPBfsOpuQgUh7zxgZ2p1EfGNhr99gAGLi2c3ss-2wOu0n9un9LFn44uVR7BCPIkRjSpTnlTHb_stRhHbrECfwNiXCoIxA-1TQmcznQ4k1l0P-sQge7YIIjvjBgNvZ5lkBNpsVanvdk97hI_kXpytkjrgIqI-d92Lw2D4xAvHGf1YQVowLJR_VnZp7E-STyTunJuQ9hy4HU0dmvbRXBRXQ1R6TcF-FTe-801qUjYqhporWtCaiO9KFEnkcYFJlIt8aZRSL30vzzpYnOvB_100_DdmW-53fLWIGYL8XFnlEWdU1tkADt3LFogPvBP4i9WwDn81AwKg_Q

ca.crt: 1025 bytes

- Find the Dashboard Service port

[root@master k8s]# kubectl get svc -n kube-system
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)         AGE
kube-dns               ClusterIP   10.96.0.10       <none>        53/UDP,53/TCP   1d
kubernetes-dashboard   NodePort    10.102.209.161   <none>        443:32513/TCP   21h

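- Access the Dashboard

Open https://192.168.56.2:32513 in a browser, where 32513 is the NodePort shown above; the IP address of any node works. Sign in with the token obtained above.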
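Instead of copying the token and port out of the describe and get svc output, both can be read with kubectl's jsonpath output. A sketch assuming the ServiceAccount and Service names used in this section and a ServiceAccount with an auto-created token Secret, as in Kubernetes 1.8; dashboard_token and dashboard_url are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Sketch: print the Dashboard login token and URL (hypothetical helpers).
# Assumes the names kubernetes-dashboard-admin and kubernetes-dashboard in kube-system.

dashboard_token() {
  local secret
  # Name of the token Secret attached to the ServiceAccount
  secret="$(kubectl -n kube-system get sa kubernetes-dashboard-admin -o jsonpath='{.secrets[0].name}')"
  # Secret data is base64-encoded; decode it to get the bearer token
  kubectl -n kube-system get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}

dashboard_url() {
  local node_ip="$1" port
  port="$(kubectl -n kube-system get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}')"
  echo "https://${node_ip}:${port}"
}

# Usage on the Master:
#   dashboard_token; echo
#   dashboard_url 192.168.56.2
```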

3.8 Deploy the Heapster add-on

Install Heapster to add resource usage statistics and monitoring to the cluster, and charts to the Dashboard.

mkdir -p ~/k8s/heapster

cd ~/k8s/heapster

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/grafana.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/rbac/heapster-rbac.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/heapster.yaml

wget https://raw.githubusercontent.com/kubernetes/heapster/master/deploy/kube-config/influxdb/influxdb.yaml

kubectl create -f ./

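4. Problems encountered

4.1 Using a proxy to reach blocked sites

- If you have no proxy

You can sign up for a free AWS account, create an EC2 instance and set up a Shadowsocks server on it.

- Configure the proxy client

Reference: https://www.zybuluo.com/ncepuwanghui/note/954160

- Configure a proxy for Docker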

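① Create the directory for Docker's systemd drop-in files

mkdir -p /etc/systemd/system/docker.service.d

② Edit /etc/systemd/system/docker.service.d/http-proxy.conf and add the following:

[Service]
Environment="HTTP_PROXY=http://master.k8s.samwong.im:8118" "NO_PROXY=localhost,*.samwong.im,192.168.0.0/16,127.0.0.1,10.244.0.0/16"

③ Edit /etc/systemd/system/docker.service.d/https-proxy.conf and add the following:

[Service]
Environment="HTTPS_PROXY=https://master.k8s.samwong.im:8118" "NO_PROXY=localhost,*.samwong.im,192.168.0.0/16,127.0.0.1,10.244.0.0/16"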

④ Restart Docker

systemctl daemon-reload && systemctl restart docker

⑤ Verify the configuration

[root@master k8s]# systemctl show --property=Environment docker | more
Environment=HTTP_PROXY=http://master.k8s.samwong.im:8118 NO_PROXY=localhost,*.samwong.im,192.168.0.0/16,127.0.0.1,10.244.0.0/16 HTTPS_PROXY=https://master.k8s.samwong.im:8118

- Configure a proxy for yum

① Append the following to /etc/yum.conf:

proxy=http://master.k8s.samwong.im:8118

② Rebuild the yum cache

yum makecache

- Configure a proxy for wget

Append the following to /etc/wgetrc:

ftp_proxy=http://master.k8s.samwong.im:8118

http_proxy=http://master.k8s.samwong.im:8118

https_proxy=http://master.k8s.samwong.im:8118

- Configure a global proxy

If shell sessions need Internet access, append the following to /etc/profile:

PROXY_HOST=master.k8s.samwong.im

export all_proxy=http://$PROXY_HOST:8118

export ftp_proxy=http://$PROXY_HOST:8118

export http_proxy=http://$PROXY_HOST:8118

export https_proxy=http://$PROXY_HOST:8118

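export no_proxy=localhost,*.samwong.im,192.168.0.0/16,127.0.0.1,10.10.0.0/16

Note: disable the global proxy while deploying Kubernetes; otherwise requests to internal services are also sent through the proxy and fail (see 4.4).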
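The two Docker proxy files from 4.1 can also be written as a single drop-in. A sketch that reuses the proxy addresses and NO_PROXY list from this article; the file name proxy.conf, the function name, the override variables and the optional directory argument are hypothetical, and the file is meant to replace http-proxy.conf and https-proxy.conf rather than sit next to them:

```shell
#!/usr/bin/env bash
# Sketch: write the Docker proxy settings from 4.1 into one drop-in (hypothetical helper).
# Defaults are the values used in this article; override via the *_URL/*_LIST variables.
write_docker_proxy() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  local http_url="${HTTP_PROXY_URL:-http://master.k8s.samwong.im:8118}"
  local https_url="${HTTPS_PROXY_URL:-https://master.k8s.samwong.im:8118}"
  local no_list="${NO_PROXY_LIST:-localhost,*.samwong.im,192.168.0.0/16,127.0.0.1,10.244.0.0/16}"
  mkdir -p "$dir"
  # systemd accepts several quoted VAR=value assignments on one Environment= line
  cat > "$dir/proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=${http_url}" "HTTPS_PROXY=${https_url}" "NO_PROXY=${no_list}"
EOF
}

# Usage (as root):
#   write_docker_proxy
#   systemctl daemon-reload && systemctl restart docker
#   systemctl show --property=Environment docker
```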

4.2 Download packages and images

- Download kubeadm, kubectl and kubelet

wget https://storage.googleapis.com/kubernetes-release/release/v1.8.1/bin/linux/amd64/kubeadm

wget https://storage.googleapis.com/kubernetes-release/release/v1.8.1/bin/linux/amd64/kubectl

wget https://storage.googleapis.com/kubernetes-release/release/v1.8.1/bin/linux/amd64/kubelet

Reference: https://kubernetes.io/docs/tasks/tools/install-kubectl/#install-kubectl-binary-via-curl
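The three downloads can be looped. A sketch; the helper names and the K8S_VERSION variable are hypothetical, and installing the bare binaries this way does not create the kubelet systemd unit that the RPM packages from 3.1 provide:

```shell
#!/usr/bin/env bash
# Sketch: download the release binaries listed in 4.2 (hypothetical helpers).
K8S_VERSION="${K8S_VERSION:-v1.8.1}"

# Build the download URL for one binary, e.g. kubeadm
k8s_binary_url() {
  echo "https://storage.googleapis.com/kubernetes-release/release/${K8S_VERSION}/bin/linux/amd64/$1"
}

# Download kubeadm, kubectl and kubelet into a directory and make them executable
download_k8s_binaries() {
  local dest="${1:-/usr/bin}" bin
  for bin in kubeadm kubectl kubelet; do
    wget -O "$dest/$bin" "$(k8s_binary_url "$bin")" && chmod +x "$dest/$bin" || return 1
  done
}

# Usage (as root, through the wget proxy from 4.1 if needed):
#   download_k8s_binaries /usr/bin
```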

4.3 Push local images to an image registry

- Upload images

docker login -u xxxxxx@163.com -p xxxxxx hub.c.163.com

docker tag gcr.io/google_containers/kube-apiserver-amd64:v1.8.1 hub.c.163.com/xxxxxx/kube-apiserver-amd64:v1.8.1

docker push hub.c.163.com/xxxxxx/kube-apiserver-amd64:v1.8.1

docker rmi hub.c.163.com/xxxxxx/kube-apiserver-amd64:v1.8.1

docker logout hub.c.163.com

- Download images

docker pull hub.c.163.com/xxxxxx/kube-apiserver-amd64:v1.8.1

docker tag hub.c.163.com/xxxxxx/kube-apiserver-amd64:v1.8.1 gcr.io/google_containers/kube-apiserver-amd64:v1.8.1

docker rmi hub.c.163.com/xxxxxx/kube-apiserver-amd64:v1.8.1

docker logout hub.c.163.com

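- Update images

After re-tagging, stop and remove all running containers so that the kubelet recreates them from the updated images:

docker update --restart=no $(docker ps -q) && docker stop $(docker ps -q) && docker rm $(docker ps -q)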
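The tag, push and pull steps above repeat for every image, so they can be wrapped in functions. A sketch under stated assumptions: the registry namespace keeps the article's placeholder xxxxxx, only the four control-plane images for v1.8.1 are listed (etcd, pause and DNS images would be added the same way), and the helper names and DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: move images through a private registry (hypothetical helpers).
# REGISTRY keeps the article's placeholder namespace "xxxxxx"; replace it with your own.
REGISTRY="${REGISTRY:-hub.c.163.com/xxxxxx}"
UPSTREAM="gcr.io/google_containers"
# Control-plane images for v1.8.1 only; add etcd, pause and DNS images as needed.
IMAGES=(
  kube-apiserver-amd64:v1.8.1
  kube-controller-manager-amd64:v1.8.1
  kube-scheduler-amd64:v1.8.1
  kube-proxy-amd64:v1.8.1
)

# With DRY_RUN=1 the docker commands are only printed
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# On a machine that already has the gcr.io images (after docker login)
push_image() {
  run docker tag "$UPSTREAM/$1" "$REGISTRY/$1"
  run docker push "$REGISTRY/$1"
  run docker rmi "$REGISTRY/$1"
}

# On each cluster node: pull from the registry and restore the gcr.io name
pull_image() {
  run docker pull "$REGISTRY/$1"
  run docker tag "$REGISTRY/$1" "$UPSTREAM/$1"
  run docker rmi "$REGISTRY/$1"
}

# Usage: for img in "${IMAGES[@]}"; do pull_image "$img"; done
```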

4.4 kubeadm init error

- Error description

{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {},
  "status": "Failure",
  "message": "nodes is forbidden: User \"system:anonymous\" cannot list nodes at the cluster scope",
  "reason": "Forbidden",
  "details": {
    "kind": "nodes"
  },
  "code": 403
}

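- Cause

A global proxy was configured in /etc/profile on this node, so kubectl's requests to kube-apiserver were also forwarded through the proxy. The requests therefore did not arrive with the expected certificates, were treated as coming from system:anonymous, and were rejected.

- Solution

Remove the global proxy and configure only the Docker, yum and wget proxies.

See 4.1.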

4.5 Adding a node to the Kubernetes cluster fails

- Problem description

Running kubeadm join on the node to add it to the cluster fails as follows:

kubeadm join --token=a20844.654ef6410d60d465 --discovery-token-ca-cert-hash sha256:0c2dbe69a2721870a59171c6b5158bd1c04bc27665535ebf295c918a96de0bb1 master.k8s.samwong.im:6443
[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.
[preflight] Running pre-flight checks
[discovery] Trying to connect to API Server "master.k8s.samwong.im:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://master.k8s.samwong.im:6443"
[discovery] Failed to request cluster info, will try again: [Get https://master.k8s.samwong.im:6443/api/v1/namespaces/kube-public/configmaps/cluster-info: EOF]

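- Cause

The token had expired and been deleted. Listing tokens on the Master returns nothing:

kubeadm token list

- Solution

Create a new token. Tokens are valid for 24 hours by default; passing --ttl 0 when creating a token makes it never expire.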

[root@master ~]# kubeadm token create --ttl 0
3a536a.5d22075f49cc5fb8
[root@master ~]# kubeadm token list
TOKEN                     TTL         EXPIRES   USAGES                   DESCRIPTION   EXTRA GROUPS
3a536a.5d22075f49cc5fb8   <forever>   <never>   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
